Introduction

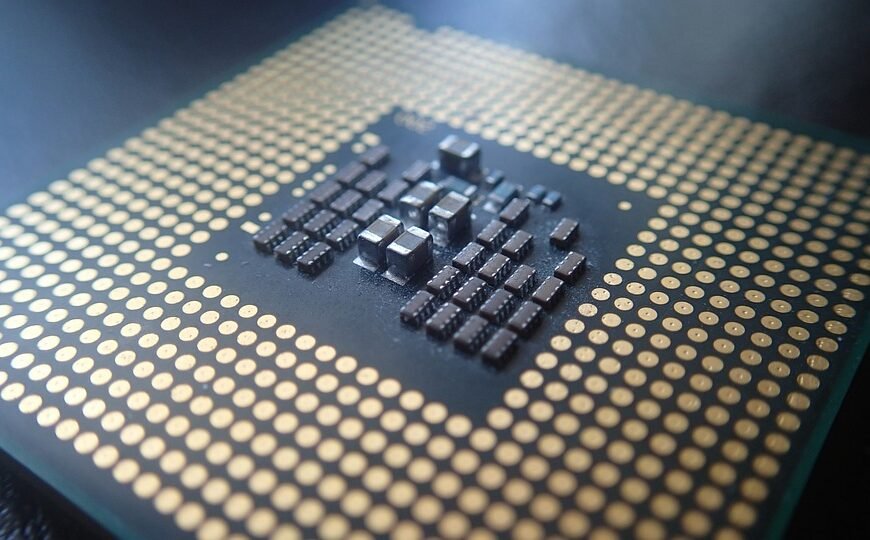

The Central Processing Unit (CPU) is often referred to as the brain of a computer. Its role is critical in performing calculations, making decisions, and executing instructions. As technology has advanced, the architecture, performance, and functionality of CPUs have evolved significantly. This article explores the captivating journey of CPUs, starting from the earliest models, advancing through groundbreaking technological innovations, and looking ahead to promising future trends.

I. Early Models: A Glimpse into the Past

The history of CPUs dates back to the early 1940s, when the first electronic computers were built. These machines processed data with vacuum tubes rather than with a single, self-contained processor. The Electronic Numerical Integrator and Computer (ENIAC), often described as the first programmable, general-purpose electronic computer, was completed in 1945. Although ENIAC did not have a CPU in today’s sense, it laid the groundwork for future designs.

A. The First Generation: Vacuum Tubes to Transistors

The shift from vacuum tubes to transistors in the late 1950s was a turning point for processor design. Transistors were smaller, more reliable, and more power-efficient than vacuum tubes, so far more circuitry could fit in the same space. Early transistorized computers, such as the IBM 1401, offered a significant increase in processing power and efficiency.

B. Integrated Circuits: A New Horizon

In the 1960s, engineers began to integrate multiple transistors onto a single semiconductor chip, leading to the creation of integrated circuits (ICs). This advancement drastically reduced the size of computers and made them more accessible. Intel’s 4004, released in 1971, is often considered the first commercially available microprocessor: it placed an entire CPU on a single chip. It housed 2,300 transistors and could perform roughly 60,000 operations per second.

C. The 808x Series: The Rise of Personal Computing

Intel’s 8080, introduced in 1974, became instrumental in the personal computing revolution. The 8080 had an 8-bit architecture and featured 6,000 transistors, rendering it much more powerful than its predecessors. This model spurred a wave of innovation and competition among manufacturers, leading to the emergence of various rival architectures, including Zilog’s Z80.

II. The Golden Age of CPUs: 1980s to the 1990s

A. The 16-bit and 32-bit Era

With the advent of 16-bit architectures in the late 1970s and early 1980s (notably the Intel 8086, introduced in 1978), computing power expanded dramatically. The transition to 32-bit CPUs in the mid-1980s, exemplified by Intel’s 80386 (released in 1985), brought a much larger address space and better support for multitasking. These advancements made personal computers more practical for both consumers and businesses.
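The effect of address width on reachable memory can be worked out directly. The sketch below assumes simple byte addressing, where an n-bit address reaches 2^n bytes. The 20-bit case is included because the 8086, although a 16-bit processor, used a 20-bit physical address bus. The function names are illustrative only.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given address width."""
    return 2 ** address_bits


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units (KiB, MiB, GiB, TiB)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    value = float(num_bytes)
    for unit in units:
        # Stop at the first unit where the value drops below 1024,
        # or at the largest unit we know about.
        if value < 1024 or unit == units[-1]:
            return f"{value:g} {unit}"
        value /= 1024


for bits in (16, 20, 32):
    print(f"{bits}-bit addresses: {human_readable(addressable_bytes(bits))}")
# 16-bit addresses: 64 KiB
# 20-bit addresses: 1 MiB
# 32-bit addresses: 4 GiB
```

Moving from 16-bit to 32-bit addresses therefore raises the directly addressable range from kilobytes to gigabytes.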

B. RISC vs. CISC Architectures

During the 1980s, the debate between Reduced Instruction Set Computing (RISC) and Complex Instruction Set Computing (CISC) became prominent. RISC architectures, as popularized by ARM and MIPS, used small sets of simple instructions that could each execute quickly, maximizing performance and efficiency. In contrast, CISC architectures like Intel’s x86 offered richer instruction sets in which a single instruction can carry out a multi-step operation, so a program needs fewer instructions to do the same work.

C. The Pentium Revolution

Intel’s Pentium series, launched in 1993, solidified the company’s dominance. Featuring a superscalar pipeline, branch prediction, and a wider data bus, Pentiums set the standard for computing performance in the 1990s. The Pentium Pro, introduced in 1995, added a focus on high-performance server and workstation applications, propelling Intel’s technological advancements further.

III. The Modern Era of CPUs: 2000s to Present

A. Multi-core Processors

The arrival of multi-core processors in mainstream computers in the mid-2000s marked a significant shift in CPU design. Rather than relying solely on higher clock speeds, manufacturers focused on increasing the number of cores. AMD’s Athlon 64 X2 (2005) and Intel’s Core Duo (2006) were among the pioneering desktop and laptop models. This architecture allowed for genuine parallel processing: independent tasks could execute simultaneously on separate cores, greatly improving performance for multi-threaded applications.
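How much a multi-threaded application gains from extra cores depends on how much of its work can actually run in parallel. One standard way to estimate this is Amdahl's law, which the article does not mention and which is used here only as an illustrative model. The parallel fractions below are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a program runs in parallel.

    parallel_fraction: share of the runtime that can be spread across cores (0..1).
    cores: number of cores available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time; the parallel part is divided by the core count.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: mostly serial vs. mostly parallel.
for fraction in (0.5, 0.9):
    for cores in (2, 4, 8):
        print(f"parallel={fraction:.0%} cores={cores}: "
              f"speedup <= {amdahl_speedup(fraction, cores):.2f}x")
```

Under this model, adding cores helps most when nearly all of the workload is parallel, which is why multi-core designs benefit multi-threaded software in particular.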

B. The Emergence of Mobile SoCs

As mobile devices gained popularity, manufacturers began to develop systems on a chip (SoCs), which integrate the CPU and other functions, such as graphics and connectivity, into a single chip. The ARM architecture became the de facto standard for smartphones and tablets, delivering strong performance at low power consumption. Apple’s A-series chips, Qualcomm’s Snapdragon series, and Samsung’s Exynos chips illustrate the rapid advancements in mobile processing technology.

C. Specialized Processors and AI Acceleration

In recent years, the rise of data science, machine learning, and artificial intelligence has led to the development of specialized processors. Graphics Processing Units (GPUs) from companies like NVIDIA and AMD have evolved to perform parallel processing more efficiently than traditional CPUs for specific tasks. Furthermore, Tensor Processing Units (TPUs) designed by Google specifically target machine learning workloads, demonstrating the importance of tailored architectures for modern computing challenges.

IV. Industry Insights and Technical Innovations

A. Moore’s Law and Its Implications

Moore’s Law, first stated by Gordon Moore in 1965 and revised by him in 1975, predicts that the number of transistors on a chip will double approximately every two years. This observation has driven the semiconductor industry to pursue ever smaller transistors and ever more complex chips. However, as transistors shrink, physical and financial limitations are starting to challenge this trajectory, making it necessary for manufacturers to innovate beyond mere scaling.
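The doubling rule can be written as N(t) = N0 * 2^((t - t0) / T), where T is the doubling period. The sketch below applies it to the Intel 4004's 2,300 transistors in 1971, the figure given earlier in this article. It is an idealized projection, not a record of actual chips, and the function name is illustrative.

```python
def projected_transistors(base_count: float, base_year: int, target_year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: the count doubles every doubling period."""
    elapsed_years = target_year - base_year
    return base_count * 2 ** (elapsed_years / doubling_period_years)


# Start from the Intel 4004: 2,300 transistors in 1971.
for year in (1971, 1981, 1991, 2001):
    count = projected_transistors(2300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
# 1981 is five doublings after 1971, so the model gives 2,300 * 32 = 73,600.
```

Because growth is exponential, each decade multiplies the projected count by 32, which shows why sustaining the trend becomes progressively harder.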

B. 3D Chip Technology

To address limitations imposed by traditional planar chip designs, researchers have turned to 3D chip technology, in which layers of silicon are stacked vertically. Stacking shortens the interconnects between components, which can improve both performance and energy efficiency. Companies like Intel and TSMC are investing heavily in 3D technologies to future-proof their production capabilities.

C. Quantum Computing

As we look toward the future, quantum computing represents a potentially transformative leap in processing capabilities. Unlike classical CPUs, which operate on bits that are either 0 or 1, quantum computers use qubits, which can exist in a superposition of both states. For certain classes of problems, this could allow calculations far faster than any classical method. Companies such as IBM and Google, along with startups like Rigetti, are advancing quantum computing research, aiming to solve problems intractable for classical computers.

V. The Future Outlook: What’s Next for CPUs?

As we look ahead, several key trends will likely shape the future of CPUs:

A. Heterogeneous Computing

The integration of various specialized computing units, such as CPUs, GPUs, and AI accelerators, into a single system is on the rise, a trend known as heterogeneous computing. This approach allows for optimizing performance by allocating tasks to the most suitable processor, reducing bottlenecks and improving efficiency.
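The idea of routing each task to the most suitable processor can be sketched as a simple lookup. The task kinds, unit names, and mapping below are hypothetical and chosen purely for illustration; real schedulers also weigh load, data movement, and power.

```python
from dataclasses import dataclass

# Hypothetical mapping from task kind to the unit assumed to suit it best.
PREFERRED_UNIT = {
    "control_flow": "CPU",
    "graphics": "GPU",
    "matrix_multiply": "AI accelerator",
}


@dataclass
class Task:
    name: str
    kind: str


def assign_unit(task: Task) -> str:
    """Pick a processing unit for a task, falling back to the CPU for unknown kinds."""
    return PREFERRED_UNIT.get(task.kind, "CPU")


def schedule(tasks: list[Task]) -> dict[str, list[str]]:
    """Group task names by the unit they are assigned to."""
    plan: dict[str, list[str]] = {}
    for task in tasks:
        plan.setdefault(assign_unit(task), []).append(task.name)
    return plan


tasks = [
    Task("parse_request", "control_flow"),
    Task("render_frame", "graphics"),
    Task("run_inference", "matrix_multiply"),
    Task("write_log", "io"),  # unknown kind, so it falls back to the CPU
]
print(schedule(tasks))
```

The CPU acts as the general-purpose fallback here, which mirrors its usual role as the coordinator in a heterogeneous system.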

B. Energy Efficiency and Sustainability

With the increasing demand for processing power, energy efficiency remains a crucial focus for CPU manufacturers. The industry is committed to developing chips that not only deliver high performance but do so sustainably. Innovations such as dynamic voltage and frequency scaling (DVFS) and low-power state architectures will play pivotal roles in achieving these goals.
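To see why DVFS saves energy, it helps to use a common first-order model of dynamic power in CMOS logic, P ≈ C * V^2 * f. The model is an assumption brought in for illustration and is not taken from this article. The capacitance, voltage, and frequency values below are hypothetical.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """First-order dynamic power estimate in watts: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating points for the same chip.
nominal = dynamic_power(capacitance_f=1e-9, voltage_v=1.2, frequency_hz=3.0e9)
scaled = dynamic_power(capacitance_f=1e-9, voltage_v=0.9, frequency_hz=2.0e9)

print(f"nominal: {nominal:.2f} W")
print(f"scaled:  {scaled:.2f} W")
print(f"power at the scaled point relative to nominal: {scaled / nominal:.1%}")
```

Because voltage enters the model squared, lowering voltage and frequency together cuts power by more than the drop in clock speed alone would suggest.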

C. The Role of AI in CPU Design

Artificial intelligence will likely reshape CPU design by automating parts of the architecture optimization process. Machine learning algorithms can analyze performance benchmarks and adjust designs to maximize efficiency. The field is still in its infancy but promises significant advancements.

D. Evolving Workloads

As data generation grows exponentially, CPUs will need to adapt to support workloads from cloud computing, IoT devices, and edge computing. Architectures must evolve to ensure they can handle the influx of data and the demand for real-time processing, which will push developers to innovate continually.

Conclusion

To trace the evolution of CPUs is to follow the trajectory of modern computing itself. From the rudimentary vacuum tubes of the early electronic computers to today’s highly specialized and efficient processors, the journey illustrates not only technological advancements but also a relentless pursuit of innovation. With multi-core processing and mobile SoCs now well established and quantum capabilities on the horizon, it is clear that the CPU’s evolution will continue to shape how we interact with technology.

The future holds exciting prospects, including heterogeneous computing, AI-driven designs, and energy-efficient architectures that could redefine our computational landscape. In many respects, the CPU’s journey is far from over; it remains a pivotal component in our increasingly digital world, constantly evolving to meet our future needs. As innovations continue to unfold, they will not only transform data processing but also redefine the very possibilities of computation itself, paving the way for advancements we can only begin to imagine.